HDR - An Introduction

What is HDR? Why does it matter? Will it last? And how should we handle it? Kevin Shaw casts some light onto the brights and darks of this tech

January 2017. Note: This article was updated in January 2020. Read the new version…

We’ve been hearing about ‘HDR’ since 2014, and it’s clearly an important and much-debated development in the industry. Rather confusingly, HDR has come to mean two quite different things:

1. The use of multiple exposures merged together to capture a wider dynamic range than is usually possible, or

2. The use of a display with significantly brighter whites and, usually, deeper blacks to display a higher dynamic range than the current standards (Rec. 709 and DCI-P3).

Some clarification is needed. The first definition, HDR capture, is also known as Photo HDR or HDR imaging (HDRi); this article won’t discuss that. Instead, we are going to look at HDR displays: in particular, why they are here, how they are different and why they are better.

Terms like HDR are relative and often change as technology advances. For example, “HD” has frequently been used to describe new standards. When the old black-and-white 405-line broadcasts were replaced by 625-line color, the new technology was described as “high definition”, and the term has of course been reused for many more recent shifts.

But before looking at exactly what HDR is, there are some persistent myths to dispense with.

HDR isn't a fad. It is a technology shift and it is here to stay.

HDR is not painful or tiring to watch. That complaint is subjective, like saying that 5.1 audio is too loud: it might be true in individual cases, but there will always be good and bad examples.

There is no single standard for HDR and probably never will be. HD doesn't have a single standard either, so there's nothing new in that.

Increased brightness on its own doesn't equate to HDR. For example, a BT.709 image displayed at 1000 nits would not be HDR, and would look terrible. HDR is an entirely different format, not just a display technology.

HDR isn't dependent on 4k resolution, wider color gamut or any other improvements that are bundled with it. The requirements for HDR are images with a minimum of 10 bits, and a display with enough dynamic range to handle HDR formats.

HDR is here to stay

HDR did not come about because the film and television industry demanded it. There is a wider market that drove its adoption and now it's unlikely that suppliers will want to continue to manufacture both SDR and HDR displays. There are plenty of similar historic circumstances where one technology won out.

1990s - CRTs were replaced with LED, plasma and other technologies that weren't as good for television. Why? Because CRTs are bulky and won’t fit in a laptop. We have persevered to maintain standards and today's screens are excellent, but that was not always the case.

2012 - 4k screens were introduced, despite few in our industry advocating them as superior. There is justification for 4k capture – but much less for the deliverable. Even the consumer press acknowledges that there is little benefit for domestic use. However, the technology is needed by the computer and games industries to put bigger GUIs and more windows on the screen. So 4k is here to stay and again we have adapted to it.

2016 - Screens are getting brighter and brighter, to the point that ordinary BT.709 content, which is mastered for 100 nits (a level inherited from the characteristics of the CRT), doesn't look right. But the phone and tablet industries need brighter screens to make their displays readable in daylight and in areas with high ambient lighting. In addition, research suggests that HDR displays are preferred by all audiences, a preference not shared by stereoscopic 3D and 4k. Manufacturers will eventually stick with the brighter screens and not offer anything as low as 100 nits (most TVs are brighter than that these days anyway).

So what does this latest change mean? The importance of HDR is often likened to the introduction of HD, but actually the shift from black and white to color is a closer analogy.

The adoption of HD required upgrades throughout post-production, but in many ways the workflow is the same as that of SD. The HD specifications were mostly based on SD. There was an opportunity to adopt a single standard in the transition, but the reality was that we gained many more standards.

The shift to color, on the other hand, was less about the technology and more about aesthetics. B/W content could remain B/W and be appreciated for that, but audiences globally preferred color, and as soon as it was technologically possible that became the norm. The shift caused dramatic changes to costumes, makeup and of course color grading, amongst other things. The change from B/W to color was more creative than technical, and so it is with HDR. Both are made possible by technology, but focusing on the hardware misses the point.

"Shooting for HDR" seminar for GTC at Dolby Europe


In fact, the biggest problem is explaining the concept of HDR at all. No words can do it, nor can diagrams or images on a display that is not HDR. It is like trying to explain why a color photograph is better than the black and white version. Sometimes the black and white might look nicer, but usually people will say that the color image is more life-like and has more detail. Even showing HDR and SDR on the same display, or on side-by-side displays, can be confusing, because the human eye can’t adjust for both at the same time. The best way to understand HDR is to look at a perfectly good SDR image on an SDR display and then see a properly mastered HDR version on an HDR display in the same viewing environment. If you are a colorist with access to the appropriate monitoring, you can take this experiment one step further: grade the SDR version as you normally would, then start again and grade it on the HDR display at a minimum of 1000 nits.

The problem with comparisons is that the SDR display can look great on its own... until you put an HDR display next to it. Then it appears dull and flat, because the human eye adjusts to the increased dynamic range. I’ve demonstrated this experiment to audiences both in front of monitors and with projectors in a cinema. The result is the same every time: HDR looks better, and if it doesn’t, it is usually due to the grade and not the format.

Improvements to the viewing experience

The importance of HDR

Since the introduction of quad HD (aka 4k or UHD) in 2012 there has been significant research into how to improve the viewing experience. Until that time, it was generally assumed that more pixels were always better. However, our industry, and soon afterwards the consumer critics, realised that 4k resolution brought little improvement for the home. The disillusionment that followed caused the EBU and others to reconsider the future of viewing.

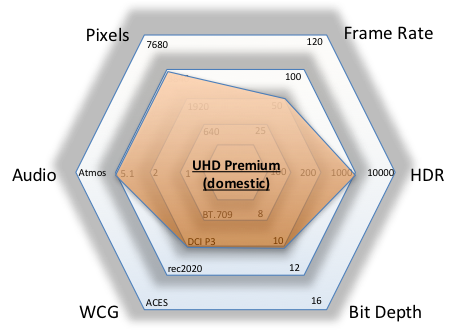

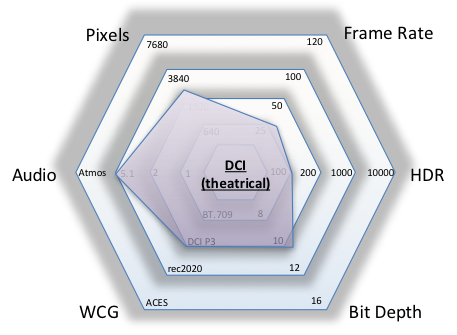

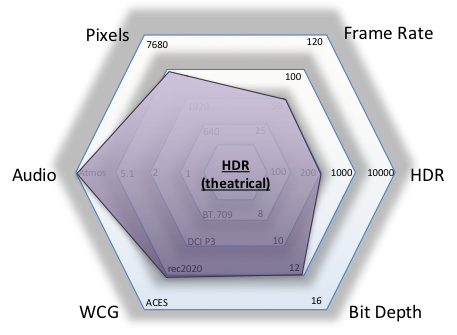

They identified six areas of improvement: resolution, higher frame rates (HFR), wider color gamut (WCG), higher dynamic range (HDR), better color depth and more immersive audio. Of these, tests showed HDR to be the most popular improvement, the cheapest to produce and among the easiest to implement. There is a seventh consideration, however: compression. 4k has four times as many pixels as HD and so needs four times the data, which demands better or more aggressive compression. Better compression techniques help, but there is a real fear that technically better images might look worse in the home after heavier compression. 4k resolution means four times the data for little gain, whereas HDR adds only about 20% more data and offers a huge improvement.
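As a rough illustration of the data arithmetic, the Python sketch below compares raw, uncompressed frame sizes. It assumes 4:4:4 sampling (three samples per pixel), no compression and an 8-bit SDR baseline; the `bits_per_frame` helper is hypothetical. Resolution alone quadruples the data, while moving from 8 to 10 bits adds 25% before compression; delivered bitrates after compression will differ from both figures.

```python
def bits_per_frame(width: int, height: int, bit_depth: int, samples_per_pixel: int = 3) -> int:
    """Raw bits for one uncompressed frame (assumes 4:4:4, i.e. 3 samples per pixel)."""
    return width * height * samples_per_pixel * bit_depth


hd_8bit = bits_per_frame(1920, 1080, 8)    # HD, 8-bit SDR baseline (assumed)
uhd_8bit = bits_per_frame(3840, 2160, 8)   # UHD ("4k") at the same bit depth
hd_10bit = bits_per_frame(1920, 1080, 10)  # HD at 10 bits, the minimum for HDR

resolution_factor = uhd_8bit / hd_8bit
bit_depth_factor = hd_10bit / hd_8bit

print(f"4k vs HD:        {resolution_factor:.2f}x")  # 4.00x
print(f"10-bit vs 8-bit: {bit_depth_factor:.2f}x")   # 1.25x
```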

The recommended improvements can be shown on six axes and scaled with measurements that start at standard definition and finish at our highest expectations. In the case of color gamut, for example, the upper limit is the range of colors we can physically see.

In the diagram our current HD television is represented on the left and UHD Premium on the right (UHD premium is already available in the home via streaming from Amazon Prime, Netflix and others).

Clearly this represents a big step forward and arguably challenges the benefits of theatrical presentations. Similar improvements are being introduced into cinemas; for instance, Dolby Cinema venues are 4k, HDR and WCG. There are over 70 Dolby Cinemas in operation around the world and around 50 films have already been released for them. The number of films available is expected to double in 2017.

The benefits of HDR

Before considering the reasons that HDR offers a better viewing experience, it is worth reiterating that there is no substitute for seeing it in the flesh. There are no before-and-after images here, since they are meaningless unless seen on an HDR display.

Dynamic range is calculated by dividing the brightness of the brightest white by that of the darkest black. So, both raising the white value and lowering the black increase dynamic range. OLED displays can shut off the light and achieve very low blacks. This looks great but can be misleading: divide any brightness by 0 and the dynamic range is infinite, meaning quoted contrast values can be deceptive.
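The calculation is simple enough to sketch in a few lines of Python. The display figures below are illustrative assumptions, not measurements of any real product. The sketch shows that raising the white or lowering the black by the same factor gives the same ratio, and that a black of exactly 0 makes the ratio infinite, which is why such quoted contrast values say little on their own.

```python
import math


def contrast_ratio(white_nits: float, black_nits: float) -> float:
    """Dynamic range as brightest white divided by darkest black."""
    if black_nits <= 0:
        # A display that switches the light off entirely: the ratio is unbounded.
        return math.inf
    return white_nits / black_nits


def stops(white_nits: float, black_nits: float) -> float:
    """The same ratio expressed in photographic stops (doublings of light)."""
    return math.log2(contrast_ratio(white_nits, black_nits))


# Illustrative, assumed display figures (not measurements).
print(contrast_ratio(100, 0.1))    # 100-nit white, 0.1-nit black
print(contrast_ratio(1000, 0.1))   # raising the white by 10x ...
print(contrast_ratio(100, 0.01))   # ... or lowering the black by 10x gives (almost exactly) the same ratio
print(contrast_ratio(1000, 0.0))   # a true zero black: inf, which says nothing useful
print(round(stops(1000, 0.05), 1))
```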

So, whilst small improvements in black level are important, it is in fact significantly brighter whites that are more visually effective. The brighter whites are not applied uniformly across the program content, but instead used sparingly to separate reflective whites (such as paper, shirts and paint) from specular highlights and illuminants (such as lamps, windows, fire and sky). In any case, no HDR display can achieve maximum brightness across the whole screen, for both practical and economic reasons. But the additional highlight detail adds a sense of realism and depth to an image.

The increased dynamic range is perceived as having more contrast, which makes images look sharper, so HDR has more effect on perceived sharpness than 4k. That doesn’t mean that all content must use the full dynamic range, but it does create more choices. With HDR, you can have dark content without it being flat.

Blue skies from the documentary "Namibia"

The increased brightness improves the color palette too. The limited brightness of standard dynamic range (SDR) restricts the vividness of colors. All RGB systems achieve maximum white by setting the red, green and blue values to maximum, which means that at peak white there can be no saturation. For example, at 100 nits (normal BT.709) a blue sky shifts towards cyan or desaturates as it brightens, because once the blue channel reaches maximum the red and green channels continue to increase. In HDR the blue can be brighter and remain both pure and saturated. Spreading this larger range over the available code values is also why HDR needs at least 10 bits to avoid banding.
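The sky example can be reproduced with a simplified per-channel clip. In the Python sketch below, the sky color, the 2x brightening and the 10x HDR headroom are all hypothetical values, and real display pipelines handle out-of-range values more carefully than a hard clip. Brightening in SDR clips the blue channel while red and green keep rising, so the color moves towards cyan and its saturation drops; with HDR headroom the ratios, and so the hue and saturation, are preserved.

```python
def brighten(rgb, gain, peak):
    """Scale a linear-light RGB triple by `gain`, clipping each channel at `peak`."""
    return tuple(min(channel * gain, peak) for channel in rgb)


def saturation(rgb):
    """HSV-style saturation: 0 for neutral grey/white, 1 for a pure primary."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi


sky = (0.2, 0.4, 1.0)  # hypothetical sky blue, blue channel already at SDR peak (1.0)

sdr_sky = brighten(sky, 2.0, peak=1.0)   # blue clips; red and green keep rising
hdr_sky = brighten(sky, 2.0, peak=10.0)  # assumed 10x highlight headroom

print(sdr_sky, round(saturation(sdr_sky), 2))  # (0.4, 0.8, 1.0) 0.6
print(hdr_sky, round(saturation(hdr_sky), 2))  # (0.4, 0.8, 2.0) 0.8
```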

HDR standards

For years now televisions have been getting brighter and no new standard was needed, so why change now? Well, our current standards for both television and cinema are based on gamma. We typically make 10-bit masters (1024 steps), where black is at code value 0 and white is at 1023. There are legal range and full range specifications, but the concept is still valid. Over the years we have adopted 100 nits as the television standard and 48 nits (14 foot-lamberts) for the cinema.

The lower brightness in cinema is offset by the screen size and the low ambient surround. Our BT.709 mastering for television is based on the response (technically the electro-optical transfer function, or EOTF) of a CRT display, because for a long time that was the only display. With LED, plasma and OLED screens it became necessary to define the CRT look more precisely, and in 2011 a reference gamma EOTF for Rec. 709 was standardized as ITU-R BT.1886. Even our DCI cinema mastering is based on gamma.

BT.709 displays on a range of devices of different brightness
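For reference, the BT.1886 curve can be sketched in Python as below, following the formula in the recommendation (gamma 2.4, with `a` and `b` derived from the display's white and black levels). The `step_near` helper is a hypothetical addition, and it assumes full-range coding for simplicity. It shows why bit depth matters: near mid-grey, one 8-bit code step is roughly four times larger in nits than one 10-bit step, which is where banding comes from.

```python
def bt1886_eotf(v: float, white_nits: float = 100.0, black_nits: float = 0.0, gamma: float = 2.4) -> float:
    """ITU-R BT.1886 reference EOTF: normalised signal V (0-1) -> luminance in nits."""
    lw = white_nits ** (1 / gamma)
    lb = black_nits ** (1 / gamma)
    a = (lw - lb) ** gamma  # user gain (legacy "contrast")
    b = lb / (lw - lb)      # black level lift (legacy "brightness")
    return a * max(v + b, 0.0) ** gamma


def step_near(level: float, bits: int, white_nits: float = 100.0) -> float:
    """Luminance jump between two adjacent code values near a normalised `level`.

    Hypothetical helper. Assumes full-range coding (0 .. 2**bits - 1);
    real masters often use legal (narrow) range.
    """
    max_code = 2 ** bits - 1
    code = round(level * max_code)
    return bt1886_eotf((code + 1) / max_code, white_nits) - bt1886_eotf(code / max_code, white_nits)


print(bt1886_eotf(1.0))                       # peak white on a 100-nit display
print(bt1886_eotf(0.0, black_nits=0.1))       # a 0.1-nit black level is reproduced at V = 0
print(step_near(0.5, 8), step_near(0.5, 10))  # 8-bit steps are roughly 4x larger
```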

The gamma curve approach works well for a range of display brightness and viewing conditions but beyond about 500 nits it starts to fail - especially in brighter areas - and images begin to display too much contrast. Interestingly, we use log curves to capture wider dynamic range in digital cameras. Log curves are based on film negative exposure rather than CRT displays. Log material looks almost normal on the new brighter displays but as displays get brighter the shadows from the log images look flat or lifted.

Dolby pioneered a new approach by studying how much more light is needed for a human audience to perceive a “just noticeable difference” (JND) across a range of brightness levels up to 10,000 nits. The result is the perceptual quantizer (PQ) EOTF, which was standardized as SMPTE ST 2084 in 2014. The PQ curve differs from gamma in a very fundamental way. Gamma ignores display brightness, or more accurately it assumes a low maximum brightness of about 100 nits. The PQ curve, on the other hand, is absolute, so displays of different maximum brightness need a different signal: either a new trim grade or an intelligent tone map to the correct levels. Content mastered up to 4000 nits will clip on a 1000 nit display.

SMPTE ST 2084 (PQ) maintains the brightness of the image regardless of the display device
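The absolute nature of PQ is easiest to see in the curve itself. The Python sketch below implements the PQ EOTF and its inverse with the constants published in SMPTE ST 2084 (and repeated in ITU-R BT.2100). The `hard_clip` function is a hypothetical stand-in for a display with no tone mapping, not how any particular product behaves. Each signal value always means the same number of nits, so a 1000-nit display simply cannot show the levels between 1000 and 4000 nits without a trim or a tone map.

```python
# PQ constants as published in SMPTE ST 2084 / ITU-R BT.2100
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32
PQ_PEAK_NITS = 10000.0


def pq_eotf(signal: float) -> float:
    """Normalised PQ signal (0-1) -> absolute luminance in nits."""
    p = signal ** (1 / M2)
    return PQ_PEAK_NITS * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


def pq_inverse_eotf(nits: float) -> float:
    """Absolute luminance in nits -> normalised PQ signal (0-1)."""
    y = (max(nits, 0.0) / PQ_PEAK_NITS) ** M1
    return ((C1 + C2 * y) / (1 + C3 * y)) ** M2


def hard_clip(nits: float, display_peak_nits: float) -> float:
    """Hypothetical display with no tone mapping: anything above its peak is lost."""
    return min(nits, display_peak_nits)


for level in (100, 1000, 4000):
    print(level, round(pq_inverse_eotf(level), 4), hard_clip(level, 1000))
```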

There are three approaches to the clipping problem.

Dolby Vision

The ideal solution is to grade on the brightest display available, currently the 4000 nit Dolby reference display, and then make at least one scene-by-scene trim pass for levels down to 100 nits. The Dolby workflow uses a “Content Mapping Unit” (CMU) to display a BT.709 output using the metadata from these trims. Dolby Vision monitors use licensed technology to interpret and display Dolby Vision content. Manufacturers such as Sony, LG and Vizio support it. One significant advantage of the Dolby Vision license is that all screens use the same technology, so for the first time in our history there should be no difference between different makes of display, and, perhaps most importantly, viewers are likely to be looking at something closer to the graded master than ever before.

HDR10

The PQ curve is used by other companies who choose not to license Dolby Vision, most commonly as HDR10. HDR10 uses the same PQ curve as Dolby Vision but with static metadata, making a single adjustment over the whole program. This sounds like it compromises image quality, but most HDR10 masters are graded at 1000 nits, which is the UHD Premium standard, and then the static metadata is not needed.
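To make "static metadata" concrete: alongside the mastering display description (SMPTE ST 2086), HDR10 typically carries two content light levels defined in CTA-861.3, MaxCLL (the brightest pixel in the programme) and MaxFALL (the highest frame-average light level). The Python sketch below computes both, assuming frames are already decoded to linear-light RGB in nits; the two toy frames are hypothetical, and real tools work on full decoded PQ images. However many scenes the programme has, the result is a single pair of numbers, in contrast to Dolby Vision's scene-by-scene trims.

```python
from typing import Iterable, List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # linear-light R, G, B, already in nits (assumed)


def max_cll_and_fall(frames: Iterable[Sequence[Pixel]]) -> Tuple[float, float]:
    """Return (MaxCLL, MaxFALL) for a whole programme.

    Each pixel's light level is taken as its brightest component, max(R, G, B).
    MaxCLL is the brightest pixel anywhere; MaxFALL is the highest frame-average level.
    """
    max_cll = 0.0
    max_fall = 0.0
    for frame in frames:
        levels: List[float] = [max(pixel) for pixel in frame]
        if not levels:
            continue  # skip empty frames
        max_cll = max(max_cll, max(levels))
        max_fall = max(max_fall, sum(levels) / len(levels))
    return max_cll, max_fall


# Two tiny hypothetical "frames": a dim scene and one with a single bright highlight.
programme = [
    [(100.0, 90.0, 80.0), (50.0, 60.0, 70.0)],
    [(1000.0, 900.0, 800.0), (10.0, 10.0, 10.0), (10.0, 10.0, 10.0), (20.0, 20.0, 20.0)],
]
print(max_cll_and_fall(programme))  # (1000.0, 260.0)
```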

HLG

The BBC were impressed enough by the early Dolby demonstrations to investigate HDR for broadcast. They quickly determined that the use of any metadata would be prohibitively complicated, especially for live events. Consequently, they developed an alternative approach known as Hybrid Log Gamma (HLG). As the name suggests, in simple terms, HLG uses gamma up to the point that it fails on HDR screens and then switches to a log curve. The result is that the log curve is not used in the shadows, where it is weakest, and the gamma curve is not used in the highlights, where it performs poorly.
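The switch-over can be seen directly in the HLG opto-electronic transfer function (OETF) published in ITU-R BT.2100, sketched in Python below with the recommendation's constants. Note that this is only the camera-side curve (scene light to signal); the display side also applies a system gamma that is not shown here. Below a normalised scene light of 1/12 the curve is a square root, the gamma-like part; above it, it is logarithmic, and the two segments meet at a signal value of 0.5.

```python
import math

# HLG OETF constants from ITU-R BT.2100
A = 0.17883277
B = 1 - 4 * A                  # 0.28466892
C = 0.5 - A * math.log(4 * A)  # approximately 0.55991073


def hlg_oetf(e: float) -> float:
    """Normalised scene-linear light E (0-1) -> HLG signal E' (0-1)."""
    if e <= 1 / 12:
        return math.sqrt(3 * e)          # square-root (gamma-like) segment: shadows and mid-tones
    return A * math.log(12 * e - B) + C  # logarithmic segment: highlights


for e in (0.0, 0.01, 1 / 12, 0.5, 1.0):
    print(f"E = {e:.4f} -> E' = {hlg_oetf(e):.4f}")
```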

There has been a lot of talk about one standard being better than the others and the need to pick one going forward. However, each has its application, and ITU-R BT.2100 specifies both the PQ and HLG transfer functions that the above formats rely on. Developments in audio provide a good analogy for this: broadcasters typically transmit a stereo audio track, whereas cinemas and home entertainment markets now expect nothing less than 5.1. Some, but not all, of those will be Dolby encoded.

Fortunately, it is relatively simple to convert between HDR formats. The most practical approach for post-production is to start with a Dolby Vision master, ideally graded at 4000 nits, which requires scene-by-scene trims saved as metadata. From that, the HDR10 static metadata can be derived and an HLG conversion can be made. Starting with the HDR10 grade at 1000 nits may not reveal important changes at higher brightness levels. Sometimes in the trim pass it is necessary to make changes to the master grade so that tone mapping to lower brightness works better. Those changes would be missed when starting with the HLG master.

The Academy of Motion Picture Arts and Sciences (AMPAS) has long recognised the problems of archiving and workflow management in a world where standards change increasingly quickly. For example, there are many movies shot on film that were first mastered on a telecine for television, remastered for HD and could be remastered for UHD Premium. Each new version is expensive and even then, it's not certain that the film experience has been preserved. To address this, AMPAS developed ACES: a file format, color space and complete workflow that preserves the full information of the capture device throughout post-production. Display formatting is done by a series of output transforms that make it relatively easy to export different standards from an ACES master. ACES is open source, which is preferred by archivists and in line with Open Archival Information System (OAIS) recommendations. The six-axis chart gives an idea of how future-proof an ACES project is! ACES is a container with enough bit depth and dynamic range to master even at 10,000 nits; it uses a color space that covers the whole visible spectrum and is independent of frame rate and resolution. There is also an open support forum.

More about the part ACES plays can be found in the second of these articles, "HDR and Post-Production".

Learn more about grading HDR in the class ACES, UHD, HDR and WCG for colorists. For other open training classes with Kevin, check his training schedule.

© Kevin Shaw 2017

Kevin Shaw C.S.I. has over 30 years of experience as a colorist, and works all over the world grading feature films, commercials and TV shows. He has been teaching colorists for over two decades, created the da Vinci Academy in 1998, co-founded the International Colorist Academy in 2009 and co-founded Colorist Society International in 2016.